September 20, 2022

Ingesting and parsing custom logs using Google Cloud’s Ops Agent

(Originally authored by Pablo Filippi (Cloud Infrastructure Architect) on Medium)

Google Cloud’s Ops Agent, part of Cloud Operations, collects logs and metrics from your Compute Engine instances. It uses Fluent Bit for logs and the OpenTelemetry Collector for metrics. In this article, we will take a deep dive into the logging side, focusing on how to parse logs using regular expressions.

Motivation

So, why would you want to parse logs at the instance level instead of parsing and manipulating them in Logs Explorer? There are specific use cases where you may not want certain information in the logs to leave the VM, for example, logs that contain confidential information. In such cases, Ops Agent lets us parse logs and send only the pieces of information we want to Cloud Operations.

Cloud Logging

For the purpose of this tutorial, suppose we want to monitor an application called my-app. To do so, we will configure Ops Agent to push the log file /var/log/my-app.log to Logs Explorer. However, we don’t want to push the entire content of the log, since it contains PII. Our example log file looks like this:

```
message: Transaction # 1234 has been completed successfully - credit card number: 1111-2222-3333-4444.
message: Transaction # 5678 has failed - social security number: 444-55-7777.
```

Hence, we will configure a regular expression so that the sensitive part of each line is not sent.

Of course, for this scenario you could also use Google Cloud’s Cloud Data Loss Prevention (DLP) to discover and protect PII. It’s a powerful serverless service that integrates seamlessly with other Google Cloud products and provides features such as data masking, de-identification mechanisms and more. Consider using DLP for large amounts of data, when the data resides in sources other than Compute Engine VMs (such as BigQuery, Cloud Storage or Datastore), or when you need more complex data manipulation such as obfuscation, masking and classification.

Getting Started

First, we need to install Ops Agent on the VM where our app is running, since the agent does not come preinstalled on Compute Engine instances. Our instance runs Debian, but other Linux distributions (and Windows) are supported as well. You can also batch-install the agent on a fleet of VMs using either agent policies or automation tools.

For the sake of this tutorial, though, SSH into your VM and, from your home directory, run the following commands to install the agent:

```shell
curl -sSO https://dl.google.com/cloudagents/add-google-cloud-ops-agent-repo.sh
sudo bash add-google-cloud-ops-agent-repo.sh --also-install
```

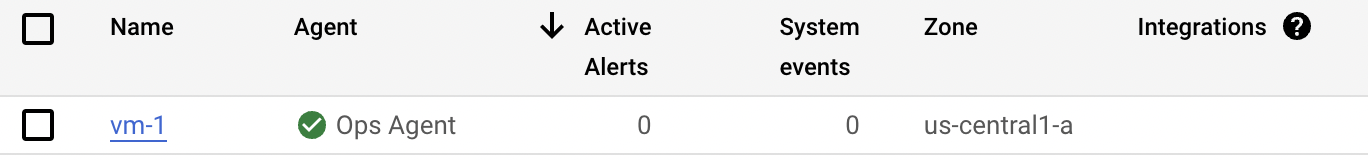

You can confirm that the agent was installed correctly in the Google Cloud console. Check the agent status under Monitoring > Dashboards > VM Instances.

Customizing Ops Agent

Next, we will customize the agent to ingest our application log. Edit the agent configuration file /etc/google-cloud-ops-agent/config.yaml and add the following section:

```yaml
logging:
  receivers:
    my-app-receiver:
      type: files
      include_paths:
        - /var/log/my-app.log
      record_log_file_path: true
  processors:
    my-app-processor:
      type: parse_regex
      regex: "^message:(?<my_app_logfile>.*) -"
  service:
    pipelines:
      default_pipeline:
        receivers: [my-app-receiver]
        processors: [my-app-processor]
```

Bear in mind that Fluent Bit, and therefore Ops Agent, uses Ruby regular expressions, as explained here. To test your regular expressions, you can use https://rubular.com/.
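You may also want to check the extraction offline. The following is a minimal Python sketch that applies the same pattern to the two sample lines shown earlier. It assumes Python's `re` module behaves like Ruby's engine for this simple pattern. The one syntax difference is that Python writes the named group as `(?P<my_app_logfile>...)` rather than `(?<my_app_logfile>...)`. The `extract` helper and `SAMPLE_LINES` list are hypothetical names for illustration. The sketch only mimics the matching step; it does not reproduce how Ops Agent builds the final log entry.

```python
import re
from typing import Optional

# Same pattern as in config.yaml, but with Python's (?P<name>...) named-group
# syntax instead of Ruby's (?<name>...). This is an assumed equivalent for testing.
PATTERN = re.compile(r"^message:(?P<my_app_logfile>.*) -")

# The two example lines from /var/log/my-app.log.
SAMPLE_LINES = [
    "message: Transaction # 1234 has been completed successfully - credit card number: 1111-2222-3333-4444.",
    "message: Transaction # 5678 has failed - social security number: 444-55-7777.",
]


def extract(line: str) -> Optional[str]:
    """Return the text captured by my_app_logfile, or None if the line does not match."""
    match = PATTERN.search(line)
    if match is None:
        return None
    return match.group("my_app_logfile")


if __name__ == "__main__":
    for line in SAMPLE_LINES:
        # repr() makes the leading space in the capture visible.
        print(repr(extract(line)))
```

The capture keeps the leading space after `message:`. Because `.*` is greedy, the capture ends at the *last* ` -` in the line, so `message: A - B - secret` yields `' A - B'`. A lazy `.*?` would stop at the first ` -` instead. Lines with no ` -` separator produce no capture at all, so check them before relying on the regex to strip PII.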

We can now restart the agent for the changes to take effect:

```shell
sudo service google-cloud-ops-agent restart
```

In Logs Explorer, we can now see the log content without the PII:

Logs Explorer

Recap

By following this tutorial, we used Ops Agent both to ingest custom logs into Logs Explorer and to filter the data we were ingesting, in this case sensitive information. This approach is useful for small amounts of data produced by Compute Engine instances. However, if you need to protect your data at a global scale, or if it resides in other GCP products such as BigQuery, take a look at GCP DLP. As mentioned earlier, it provides a robust framework for dealing with PII. It’s also worth mentioning that Cloud Logging costs are minimal compared with DLP. You can get the pricing details here and here.

Moreover, you can use this functionality not only for confidential information but for any kind of information in your VMs’ logs. Whether you want to protect sensitive data, reduce costs or remove irrelevant information, this feature can be of great help. Hope you found this article helpful. Thanks for reading!

Zencore was started in 2021 by former senior Google Cloud engineers, solution architects and developers. The consulting and services firm is focused on solving business challenges with Cloud Technology and Tools backed by a world-class development, engineering, and data science team.